You’ve been there before. You thought you could trust someone with a secret. You thought it would be safe, but found out later that they blabbed to everyone. Or, maybe they didn’t share it, but the way they used it felt manipulative. You gave more than you got and it didn’t feel fair. But now that it’s out there, do you even have control anymore?

Ok. Now imagine that person was your supermarket.

Or your doctor.

Or your boss.

Do you have a personal relationship with technology?

According to research at the Me2B Alliance, people do feel they have a relationship with technology. It’s emotional. It’s embodied. And it’s very personal.

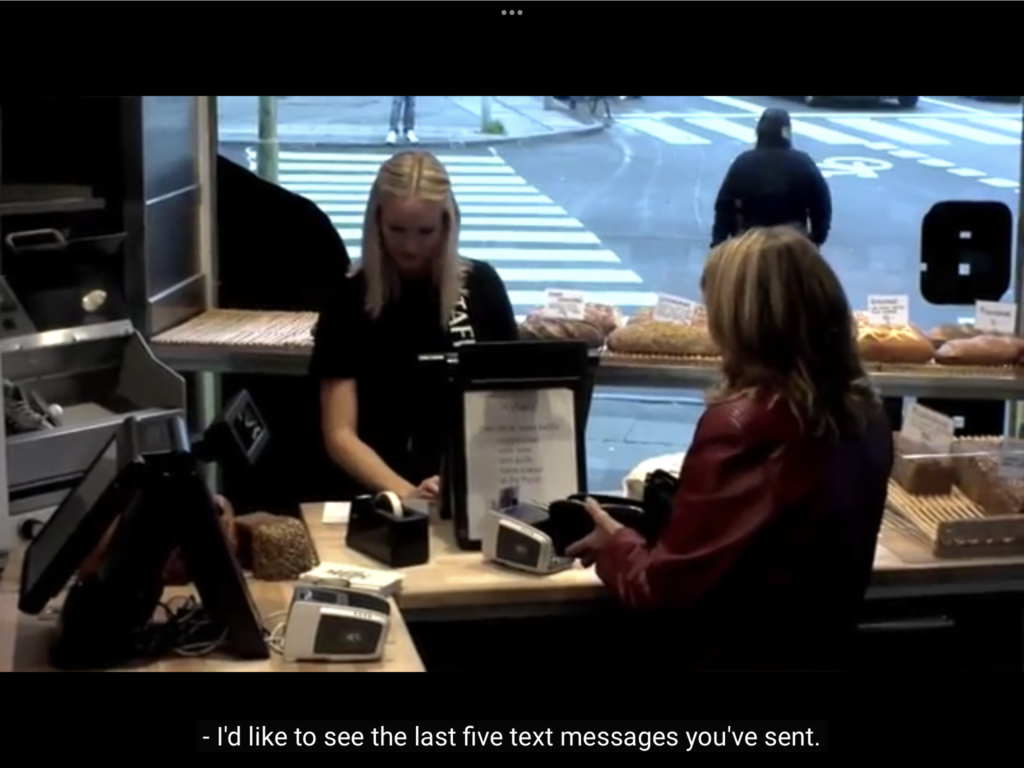

How personal is it? Think about what it would be like if you placed an order at a cafe and they already knew your name, your email, your gender, your physical location, what you read, who you are dating, and that, maybe, you’ve been thinking of breaking up.

We don’t approve of gossipy behavior in our human relationships. So why do we accept it with technology? Sure, we get back some time and convenience, but in many ways it can feel locked in and unequal.

The Me2B Relationship Model

At the Me2B Alliance, we are studying digital relationships to answer questions like “Do people have a relationship with technology?” (They feel that they do). “What does that relationship feel like?” (It’s complicated). And “Do people understand the commitments that they are making when they explore, enter into and dissolve these relationships?” (They really don’t).

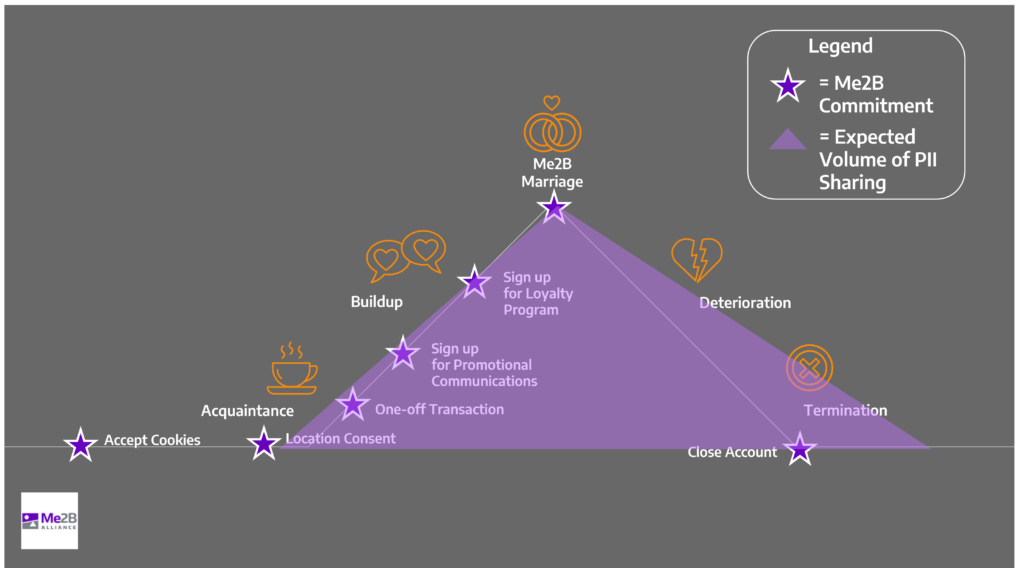

It may seem silly or awkward to think about our dealings with technology as a relationship, but there are parallels with messy human relationships. The Me2BA commitment arc with a digital technology resembles German psychologist George Levinger’s ABCDE relationship model,1 shown by the orange icons in the accompanying image. As with human relationships, we move through states of discovery, commitment and breakup with digital applications, too.

Our assumptions about our technology relationships are similar to the ones we have about our human ones. We assume when we first meet someone there is a clean slate, but this isn’t always true. There may be gossip about you ahead of your meeting. The other person may have looked you up on LinkedIn. With any technology, information about you may be known already, and sharing that data starts well before you sign up for an account.

The Invisible Parallel Dataverse

Today’s news frequently covers stories of personal and societal harm caused by digital media manipulation, dark patterns and personal data mapping. Last year, Facebook whistleblower Frances Haugen exposed how the platform promotes content that the company knows, from its own research, causes depression and self-harm in teenage girls. The company knows this because it knows what teenage girls click, post and share.

Technology enables data sharing at every point of the relationship arc, including after you stop using it. Worryingly, even our more trusted digital relationships may not be safe. The Me2B Alliance uncovered privacy violations in K-12 software, and described how abandoned website domains put children and families at risk when their schools forget to renew them.

Most of the technologies that you (and your children) use have relationships with third-party data brokers and others with whom they share your data. Each privacy policy, cookie consent and terms of use document on every website or mobile app you use defines a legal relationship, whether you choose to opt in or are locked in by some other process. That means you have a legal relationship with each of these entities from the moment you access the app or website, and in most cases, it’s one that you initiated and agreed to.

All the little bits of our digital experiences are floating out there and will stay out there unless we have the agency to set how that data can be used or shared and when it should be deleted. The Me2B Alliance has developed Rules of Engagement for respectful technology relationships and a Digital Harms Dictionary outlining types of violations, such as:

- Collecting information without the user’s awareness or consent;

- Contracts of adhesion, where users are forced to agree with terms of use (often implicitly) when they engage with the content;

- Loss or misuse of personally identifiable information; and

- Unclear or non-transparent information describing the technology’s policies or even what Me2B Deal they are getting.

Respectful technology relationships begin with minimizing the amount of data that is collected in the first place. Data minimization reduces the harmful effects of sensitive data getting into the wrong hands.

Next, we should give people agency and control. Individual control over one’s data is a key part of local and international privacy laws like GDPR in Europe, and similar laws in California, Colorado and Virginia, which give consumers the right to consent to data collection, to know what data of theirs is collected and to request to view the data that was collected, correct it, or to have it permanently deleted.
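For the programmers among us, here is a minimal sketch of what data minimization and individual control could look like in code. It is only an illustration under stated assumptions: the `PersonalDataStore` class and its method names are hypothetical, and they are not drawn from any Me2B Alliance specification or from the text of any privacy law. The idea is simply that nothing is stored without consent, and that a person can view, correct and delete what was collected.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalDataStore:
    """Hypothetical store that keeps only data the person agreed to share."""

    consented_fields: set[str] = field(default_factory=set)
    records: dict[str, str] = field(default_factory=dict)

    def grant_consent(self, field_name: str) -> None:
        self.consented_fields.add(field_name)

    def revoke_consent(self, field_name: str) -> None:
        # Revoking consent also deletes whatever was collected under it.
        self.consented_fields.discard(field_name)
        self.records.pop(field_name, None)

    def collect(self, field_name: str, value: str) -> bool:
        # Data minimization: refuse anything the person has not consented to.
        if field_name not in self.consented_fields:
            return False
        self.records[field_name] = value
        return True

    def view(self) -> dict[str, str]:
        # Return a copy so callers cannot change stored data by accident.
        return dict(self.records)

    def correct(self, field_name: str, value: str) -> bool:
        # Only data that was actually collected can be corrected.
        if field_name not in self.records:
            return False
        self.records[field_name] = value
        return True

    def delete_all(self) -> None:
        self.records.clear()


store = PersonalDataStore()
store.collect("location", "Brooklyn")       # refused: no consent was given
store.grant_consent("email")
store.collect("email", "me@example.com")    # stored: consent exists
store.correct("email", "new@example.com")   # the person fixes their own data
store.revoke_consent("email")               # consent withdrawn, data removed
```

A real product would also need to pass deletion and revocation along to any third parties that received the data, which this sketch does not attempt.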

Three Laws of Safe and Respectful Design

In his short story collection, I, Robot, Isaac Asimov introduced the famous “Three Laws of Robotics,” an ethical framework to avoid harmful consequences of machine activity. Today, information architects (IAs), programmers and other digital creators make what are essentially robots that help users do work and share information. Much of this activity is out of sight and mind, which is in fact how we, the digital technology users, like it.

But what of the risks? It is important as designers of these machines to consider the consequences of the work we put into the world. I have proposed the following corollary to Asimov’s robotics laws:

- First Law: A Digital Creator may not injure a human being or, through inaction, allow a human being to come to harm.

- Second Law: A Digital Creator must obey the orders given by other designers, clients, product managers, etc. except where such orders would conflict with the First Law.

- Third Law: A Digital Creator must protect its own existence as long as such protection does not conflict with the First or Second Law.2

In his well-known 2014 talk at An Event Apart, How Designers Destroyed the World, Mike Monteiro discusses the second and third laws at length. While we take orders from the stakeholders of our work (the client, the marketers and the shareholders we design for), we have an equal or greater responsibility to understand and mitigate design decisions that have negative effects.

A Specification for Safe and Respectful Technology

The Me2B Alliance is working on a specification for safe and respectfully designed digital technologies, that is, technologies that Do No Harm. The specification’s product integrity tests are conducted by a UX Expert and applied to each commitment stage that a person enters. These stages include first open, location awareness, cookie consent, promotional and loyalty commitments, and account creation, as well as the termination of the relationship.

Abby Covert’s IA Principles—particularly Findable, Accessible, Clear, Communicative and Controllable—are remarkably appropriate tests for ensuring that the people who use digital technologies have agency and control over the data they entrust to these products:

Findable: Are the legal documents that govern the technology relationship easy to find? What about support services for when I believe my data is incorrect, or being used inappropriately? Can I find a way to delete my account or delete my data?

Accessible: Are these resources easy to access by both human and machine readers and assistive devices? Are they hidden behind some “data paywall” such as a process that requires a change of commitment state, i.e. a data toll, to access?

Clear: Can the average user read and understand the information that explains what data is required for what purpose? Is this information visible or accessible when it is relevant?

Communicative: Does the technology inform the user when the commitment status changes? For example, does it communicate when it needs to access my location or other personal information like age, gender, medical conditions? Does it explain why it needs my data and how to revoke data access when it is no longer necessary?

Controllable: How much control do I have as a user? Can I freely enter into a Me2B Commitment or am I forced to give up some data just to find out what the Me2B Deal is in the first place?
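One way to picture how these five tests might be applied across commitment stages is as a simple checklist grid. The sketch below is an assumption-laden illustration, not the Me2B Alliance’s actual test procedure or scoring: the `unmet_principles` function and the shape of the `findings` data are hypothetical. A reviewer records a yes or no for each principle at each stage, and the function lists what still needs work. Anything not yet reviewed counts as unmet.

```python
PRINCIPLES = ("Findable", "Accessible", "Clear", "Communicative", "Controllable")

STAGES = (
    "first open",
    "location awareness",
    "cookie consent",
    "promotional and loyalty commitments",
    "account creation",
    "termination",
)


def unmet_principles(findings: dict[str, dict[str, bool]]) -> dict[str, list[str]]:
    """Return, for each stage, the principles that were not confirmed as met."""
    gaps: dict[str, list[str]] = {}
    for stage in STAGES:
        stage_findings = findings.get(stage, {})
        # A principle with no recorded finding is treated as not met.
        missing = [p for p in PRINCIPLES if not stage_findings.get(p, False)]
        if missing:
            gaps[stage] = missing
    return gaps


# Example: everything passes except the clarity of the cookie consent notice.
findings = {stage: {p: True for p in PRINCIPLES} for stage in STAGES}
findings["cookie consent"]["Clear"] = False

for stage, missing in unmet_principles(findings).items():
    print(f"{stage}: needs work on {', '.join(missing)}")
```

Treating unreviewed items as unmet is a deliberate choice in this sketch: a product should not get credit for a test nobody has run.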

Abby’s other IA principles flow from the above considerations. A Useful product is one that does what it claims to do and communicates the deal you get clearly and accessibly. A Credible product is one that treats the user with respect and communicates its value. With user Control over data sharing and a clear understanding of the service being offered, the true Value of the service is apparent.

Over time the user will come to expect notice of potential changes to commitment states and will have agency over making that choice. These “Helpful Patterns”—clear and discoverable notice of state changes and opt-in commitments—build trust and loyalty, leading to a Delightful, or at least a reassuring, experience for your users.

What I’ve learned from working in the standards world is that Information Architecture Principles provide a solid framework for understanding digital relationships as well as structuring meaning. Because we aren’t just designing information spaces. We’re designing healthy relationships.

1 Levinger, G. (1983). “Development and change.” In H.H. Kelley et al. (Eds.), Close relationships (pp. 315–359). New York: W. H. Freeman and Company. https://www.worldcat.org/title/close-relationships/oclc/470636389

2 Asimov, I. (1950). I, Robot. Gnome Press.