Where would we be without rating and reputation systems these days? Take them away, and we wouldn’t know who to trust on eBay, what movies to pick on Netflix, or what books to buy on Amazon. Reputation systems (essentially a rating system for people) also help guide us through the labyrinth of individuals who make up our social web. Is he or she worthwhile to spend my time on? For pity’s sake, please don’t check out our reputation points before deciding whether to read this article.

Rating and reputation systems have become standard tools in our design toolbox. But sometimes they are not well understood. A recent post at the IxDA forum showed confusion about how and when to use rating systems. Much of the conversation was about whether to use stars or some other iconography. These can be important questions, but they miss the central point of rating systems: to manage risk.

So, when we think about rating and reputation systems, the first question to ask is not, “Am I using stars, bananas, or chili peppers?” but, “What risk is being managed?”

What Is Risk?

We desire certainty in our transactions. It’s just our nature. We want to know that the person we’re dealing with on eBay won’t cheat us. Or that Blues Brothers 2000 is a bad movie (1 star on Netflix). So risk, most simply (and broadly), arises when a transaction has a number of possible outcomes, some of which are undesirable, but the precise outcome cannot be determined in advance.

Where Does Risk Come From?

There are two main sources of risk that are important for rating and reputation systems: asymmetric information and uncertainty.

Asymmetric information arises when one party to a transaction cannot completely determine in advance the characteristics of the other party, and this information cannot credibly be communicated. The main question here is: can I, the buyer, trust you, the seller, to honestly complete the transaction we’re going to engage in? That means: will you take my money and run? Did you describe what you’re selling accurately? And so on.

This unequal distribution of information between buyers and sellers is a characteristic of most transactions, even in transactions where fraud is not a concern. Online transactions make asymmetric information problems worse. No longer can we look the seller in the eye and make a judgment about their honesty. Nor can we physically inspect what we’re buying and get a feel for its quality. We need other ways to manage the risk generated by asymmetric information.

The other source of risk is not knowing beforehand whether we’ll like the thing we’re buying. Here honesty and quality are not the issue, but rather our own personal tastes and the nature of the thing we’re buying. Movies, books, and wine are examples of experience goods, which we need to experience before we know their true value. For example, we’re partial to red wine from Italy, but that doesn’t mean we’ll like every bottle of Italian red wine we buy.

Managing Risk with Design

Among the ways to manage risk, two methods will be of interest to user experience designers:

- Signaling is where participants in a transaction communicate something meaningful about themselves.

- Reducing information costs involves reducing the time and effort it takes participants in a transaction to get meaningful information (such as: is this a good price? is this a quality good?).

Reputation systems tend to enable signaling and are best utilized in evaluating people’s historical actions. In contrast, rating systems are a way of leveraging user feedback to reduce information costs and are best utilized in evaluating standard products or services.

It is important to note that reputation systems are not the only way to signal (branding and media coverage are other means, among others), and rating systems are not the only means of reducing information costs (better search engines and product reviews also help, for example). But these two tools are becoming increasingly important, as they provide quick reference points that capture useful data.

As we review various aspects of rating and reputation systems, the key questions to keep in mind are:

- Who is doing the rating?

- What, exactly, is being rated?

- If people are being rated, what behaviors are we trying to encourage or discourage?

Who is doing the rating?

A random poll of several friends shows about half use the Amazon rating system when buying books and the other half ignore it. Why do they ignore it? Because they don’t know whether the people doing the rating are crackpots or whether those people share their tastes.

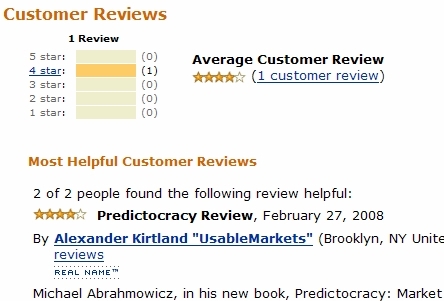

Amazon has tried to counteract some of these issues by using features such as “Real Name” and “helpfulness” ratings of the ratings themselves (see Figure 1).

Figure 1: Amazon uses real names and helpfulness to communicate honesty of the review.

This is good, but requires time to read and evaluate the ratings and reviews. It also doesn’t answer the question, how much is this person like me?

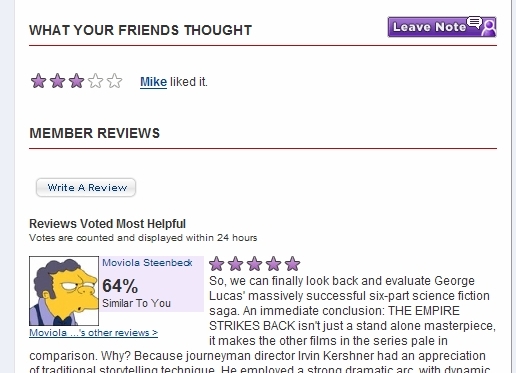

Better is Netflix’s system (Figure 2), which is explicit about finding people like you, be they acknowledged friends or matched by algorithm.

Figure 2: Netflix lets you know what people like you thought of a movie.
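
To make the idea of "people like you" concrete, here is a minimal Python sketch of a similarity-weighted rating. It is not Netflix's actual algorithm; the function names, the 1-to-5 scale, and the sample films are all assumptions made for illustration.

```python
def similarity(mine, theirs):
    """Agreement between two users on the items both rated, from 0.0 to 1.0.

    Ratings are assumed to be on a 1-5 scale, so the largest gap per item is 4.
    Returns 0.0 when the users share no rated items.
    """
    shared = set(mine) & set(theirs)
    if not shared:
        return 0.0
    average_gap = sum(abs(mine[item] - theirs[item]) for item in shared) / len(shared)
    return 1.0 - average_gap / 4.0


def predicted_rating(me, others, item):
    """Average other users' ratings of `item`, weighted by similarity to `me`."""
    weighted_sum = 0.0
    total_weight = 0.0
    for theirs in others:
        if item in theirs:
            weight = similarity(me, theirs)
            weighted_sum += weight * theirs[item]
            total_weight += weight
    # None means nobody comparable has rated the item yet.
    return weighted_sum / total_weight if total_weight else None


# Hypothetical sample data.
me = {"film_a": 5, "film_b": 1}
others = [
    {"film_a": 5, "film_b": 1, "film_c": 5},  # tastes match mine
    {"film_a": 1, "film_b": 5, "film_c": 1},  # tastes are the opposite
]
print(predicted_rating(me, others, "film_c"))
```

A plain average of the two ratings for `film_c` would be 3, which tells this user very little. Weighting by similarity lets the like-minded rater dominate, which is the kind of risk reduction that "people like you" is meant to provide.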

Both these systems implicitly recognize that validation of the rating system itself is important. Ideally users should understand three things about the other people who are doing the rating:

- Are they honest and authentic?

- Are they like you in a way that is meaningful?

- Are they qualified to adequately rate the good or service in question?

The last point is important. While less meaningful for rating systems of some experience goods (we’re all movie experts, after all), it is more important for things we understand less well. For example, while we might be able to say whether a doctor is friendly or not, we may be less able to fairly evaluate a doctor’s medical skills.

What is being rated?

Many rating systems are binary (thumbs up, thumbs down), or scaled (5 stars, 5 chili peppers, etc.), but this uni-dimensionality is inappropriate for complicated services or products which may have many characteristics.

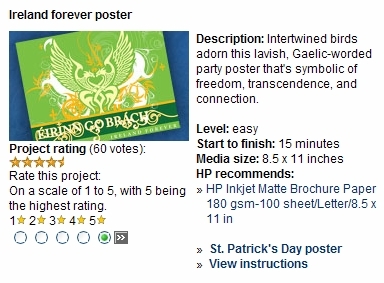

For example, Figure 3 depicts a rating system from the HP Activity Center and shows how not to do a rating system. Users select a project that interests them (e.g., how to make an Ireland Forever poster) and then complete it using materials they can purchase from HP (e.g., paper). A rating system is included, presumably to help you decide which project you should undertake in your valuable time.

Figure 3: The rating system on the HP Activity Center site: what not to do.

A moment’s reflection raises the following question: what is being rated? The final outcome of the project? The clarity of the instructions? How fun this project is? We honestly don’t know. Someone thoughtlessly included this rating system.

Good rating systems also don’t inappropriately “flatten” the information that they collect into a single number. Products and services can have many characteristics, and not being clear on what characteristics are being rated, or inappropriately lumping all aspects into a single rating, is misleading and makes the rating meaningless.

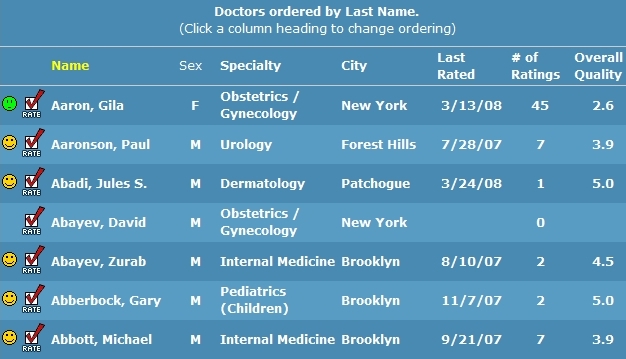

RateMDs, a physician rating site, uses a smiley face to tell us about how good the doctor is (Figure 4).

Figure 4: RateMDs.com rating system for doctors.

Simple? Yes. Appropriate? Perhaps not.

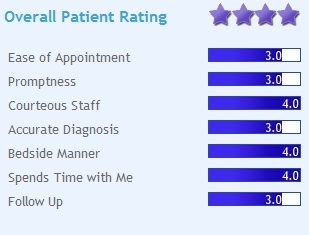

Better is Vitals, a physician rating site that includes information about doctors’ years of experience, any disciplinary actions they might have, their education, and a patient rating system (Figure 5).

Figure 5: The multi-dimensional rating system on Vitals.com.

While Vitals has an overall rating, more important are the components of the system. Each variable – ease of appointment, promptness, etc. – reflects a point of concern that helps to evaluate physicians.
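
As a rough illustration of keeping the components visible rather than flattening them, here is a minimal Python sketch. The dimension names and the 1-to-5 scale are our own assumptions, not Vitals' actual data model.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical dimensions, loosely modeled on the kinds of variables described above.
DIMENSIONS = ("ease_of_appointment", "promptness", "bedside_manner")


def summarize(ratings):
    """Average each dimension separately instead of collapsing to one number.

    `ratings` is a list of dicts mapping a dimension name to a 1-5 score.
    A rater may skip dimensions they feel unqualified to judge.
    """
    by_dimension = defaultdict(list)
    for rating in ratings:
        for dimension, score in rating.items():
            if dimension in DIMENSIONS:
                by_dimension[dimension].append(score)
    return {
        dimension: {"average": round(mean(scores), 2), "count": len(scores)}
        for dimension, scores in by_dimension.items()
    }


# Hypothetical sample ratings from three patients.
ratings = [
    {"ease_of_appointment": 5, "promptness": 2, "bedside_manner": 4},
    {"ease_of_appointment": 4, "promptness": 1},
    {"promptness": 3, "bedside_manner": 5},
]
summary = summarize(ratings)
for dimension in DIMENSIONS:
    print(dimension, summary[dimension])
```

In this sample, a single overall average would come out at about 3.4 and hide the fact that the doctor scores well on everything except promptness. Reporting the count alongside each average also shows how many raters felt able to judge that dimension.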

When rating experiences, what is being rated is relatively clear. Did you enjoy the experience of consuming this good or not? Rating physical goods and products can be more complicated. An ad hoc analysis of Amazon’s rating system (Figure 6) should help explain.

Figure 6: Amazon’s rating system is not always consistent.

In this example the most helpful favorable and unfavorable reviews are highlighted. However, each review is addressing different variables. The favorable review talks about how easy it is to set up this router, while the unfavorable review talks about the lack of new features. These reviews are presented as comparable, but they are not. These raters were thinking about different characteristics of the router.

The point here is that rating systems need to be appropriate for the goods or services that are being rated. A rating system for books cannot easily be applied to a rating system for routers, since the products are so entirely different in how we experience them. What aspects we rate need to be carefully selected, and based on the characteristics of the product or service being rated.

What behaviors are we trying to encourage?

Any rating of people is essentially a reputation system. Despite some people’s sensitivity to being rated, reputation systems are extremely valuable. Buyers need to know whom they can trust. Sellers need to be able to communicate – or signal based on their past actions – that they are trustworthy. This is particularly true online, where it’s common to do business with someone you don’t know.

But designing a good reputation system is hard. eBay’s reputation system has had some problems, such as the practice of “defensive rating” (rate me well and I’ll rate you well; rate me bad and I’ll rate you worse). This defeats the purpose of a rating system, since it undermines the honesty of the people doing the rating, and eBay has had to address this flaw in their system. What started out as an open system now needs to permit anonymous ratings in order to save the reputation (as it were) of the reputation system.

While designing a good reputation system is hard, it’s not impossible. There are five key things to keep in mind when designing a reputation system:

1. List the behaviors you want to encourage and those that you want to discourage

It’s obvious what eBay wants to encourage (see Figure 7). A look at a detailed ratings page shows they want sellers to describe products accurately, communicate well (and often), ship in a reasonable time, and not charge unreasonably for shipping. (Not incidentally, you could also view these dimensions as sources of risk in a transaction.)

Figure 7: eBay encourages good behavior.

2. Be transparent

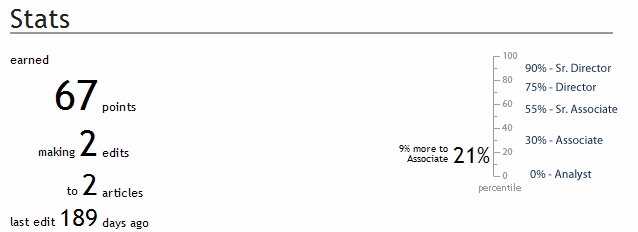

Once you know the behaviors you want to encourage, you then need to be transparent about it. Your users need to know how they are being rated and on what basis. Often a reputation is distilled into a single number — say, reputation points — but it is impossible to look at a number and derive the formula that produced it. While Wikinvest (Figure 8) doesn’t show a formula (which would be ideal), they do indicate what actions you took to receive your point total.

Figure 8: Wikinvest’s reputation system

Any clarity that is added to a reputation system will make your users happy, and it will make them more likely to behave in the manner you desire.

3. Keep your reputation system flexible

Any scoring system is open to abuse, and chances are that any reputation system you design will be abused in imaginative ways that you can’t predict. Therefore it’s important to keep your system flexible. If people begin behaving in ways that enhance their reputation but don’t enhance the community, the reputation system needs to be adjusted.

Changing the weighting of certain behaviors is one way to adjust your system. Adding ratings (or points) for new behaviors is another. The difficulty here will be in keeping everything fair. People don’t like a shifting playing field, so tweaks are better than wholesale changes. And changes should also be communicated clearly.
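
A minimal Python sketch of what a transparent, adjustable point system might look like follows. The behaviors and point values are hypothetical; a real community would choose its own.

```python
# Hypothetical point values per behavior.
WEIGHTS = {"article_published": 10, "comment_posted": 1, "edit_accepted": 3}


def reputation(actions, weights=WEIGHTS):
    """Return (total, breakdown) so users can see where their points came from.

    `actions` maps a behavior name to how many times the user did it.
    Behaviors without a weight earn nothing.
    """
    breakdown = {
        behavior: count * weights[behavior]
        for behavior, count in actions.items()
        if behavior in weights
    }
    return sum(breakdown.values()), breakdown


actions = {"article_published": 2, "comment_posted": 40, "edit_accepted": 5}
total, breakdown = reputation(actions)
print(total, breakdown)

# A tweak rather than a wholesale change: if people start padding their
# score with comments, lower that one weight and leave the rest untouched.
adjusted = {**WEIGHTS, "comment_posted": 0.25}
adjusted_total, _ = reputation(actions, adjusted)
print(adjusted_total)
```

Returning the breakdown alongside the total supports the transparency point above, and keeping the weights in one table makes a small, clearly communicated adjustment easy to apply.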

4. Avoid negative reputations

When possible, reputation systems should also be non-negative towards the individual. While negative reputations are important to protect people, negative reputations should not always be emphasized. This is especially true in community sites where users generate much of the content, and not much is at stake (except perhaps your prestige).

Looking at our example above (Figure 8), Wikinvest uses the term “Analyst” (a nice, non-offensive term … if you’re not in investment banking), to mean, “this person isn’t really contributing much.”

5. Reflect reality

Systems sometimes fail on community sites when people belong to multiple communities and their complete reputations are not contained within any one of them. While there are exceptions, allowing reputations earned elsewhere to be imported can be a smart way to bring your system in line with reality and increase the value of information that it provides.

Conclusion

Our discussion of rating systems and reputation systems is certainly incomplete. We do hope that we’ve given a good description of risk in online transactions, and how understanding this can help user experience designers better manage risk via the design of more robust rating and reputation systems.

In addition, we’d like to begin a repository of rating and reputation systems. If you find any that you’d like to share, feel free to submit them at http://101ratings.com/submit.php.

Alex and Aaron,

Thanks for a thorough look at this subject. I agree that the system used for rating should be created with the particular product or service in mind, as there are variables appropriate to one that will not be appropriate to others. This is one reason why the effort of the reviewer, especially whether he or she has taken the time to write clear and comprehensive comments in addition to any numeric rating system that is available, is so important to the person viewing the rating later. As you noted, one customer may give a product a low rating due to a particular expectation he or she had for it, while another may give the same product a high rating based upon a completely different expectation. Having access to comments or an explanation helps the prospective customer discern the importance of certain reviews to his or her decision.

One “genre” of rating systems for which this idea is applicable is the dining-related ratings site (like yelp.com or foodbuzz.com). I’ve often noted that I might read a disparaging review that gives low marks for having a limited menu and think, “that sounds like my kind of place; why would someone consider a limited menu a bad thing?” Interestingly, many users only need one not-so-good dining experience to prompt them to submit a negative review, whereas positive reviews might come from long-time loyal customers. Given that any restaurant’s service can be affected by many unpredictable factors, a measured reviewer should take into consideration whether it is their first visit, whether their order was a specialty of the restaurant, the experience of the waiter, etc. Thankfully, most websites dedicated to rating particular services would have considered specific attributes (e.g. wait time, menu scope, wait staff, etc. for restaurants, as opposed to format, page count, dimensions, etc. for books) in order to make sure that the overall presentation of any given business/product is, in general, accurate. As you mentioned, this can become a problem on sites like ebay.com, where the user is in full control over the portrayal of what is being sold. Perhaps Amazon.com has found a good fusion in this regard in the way it allows users to sell used versions of books already in their inventory?

Chris

Great article! But can you or someone talk about what to do in the case where a ratings system generates too many 4s or 5s, resulting in a problem for users to determine the minute difference between the two? Thanks!

Really interesting article. Thanks for giving me the concept of understanding the user value of ratings as a risk management tool! That is a simple and powerful insight.

However, to follow on to David Shen’s comment, I wish you’d included a bit more discussion on how the risk management perspective can instruct the design of the ratings display. Some sites are using histograms to provide a bit more richness for interpreting the rating. But to David’s point, even if I can see the distribution, how do I know if I am more like the group who gave 4 stars, or the group who gave 5? And how do I relate the difference between 4 and 5 to my purchase risk?

Great article, I also recommend Chris Allen’s awesome series on rating systems http://www.lifewithalacrity.com/2005/12/collective_choi.html

In particular, he looks at the problem with binary distribution of ratings (too many 1’s and 5’s) and how to use weighting to help create a more accurate and fine-toothed rating (poor B&A could use some of this thinking rolled into our system).

Also related is the brilliant Bernardo Huberman’s research, in particular his work with voting sites

http://www.hpl.hp.com/research/idl/papers/opinion_expression/

His Parc Forum talk is very enlightening as well

http://www.parc.com/cms/get_article.php?id=703

Thank you for a great article. Rating systems have definitely helped put some of the power into the hands of the people but like you said, they can be flawed and implemented poorly. I appreciate your effort to help offer some thoughts about best practices.

A couple of things occurred to me while I read this article:

“Real Names increases likelihood of Responsible Content”

I was struck by your comment about Amazon’s use of “Real Names” to promote social responsibility in the rating system. This assumes the truth of the old saw that anonymity on the web fosters poor behavior. I believed the same thing until I saw a few studies that have shown that ‘real names’ do not do much to improve online social graces. I guess the takeaway is that if others perceive a rating by a user with a ‘real name’ as more reliable, so much the better, but in terms of data collection, it may not make much difference.

One research report – http://www.guardian.co.uk/technology/2007/jul/12/guardianweeklytechnologysection.privacy

“Finding an Appropriate Rating Instrument”

Your point about appropriate rating systems is important. Everyone has “5 stars” on the brain – from iTunes to Amazon to Netflix and beyond. It’s great for a simple interface design but often it’s woefully inappropriate for the context it’s being used for. Some things are just too complicated to reduce to a single representative number.

I recently had a similar conversation concerning Likert scales on political surveys. Many of the hot-topic issues are so nuanced that a 5 point scale doesn’t really allow me to express my thoughts accurately (Do I “somewhat support” abortion? What does that even mean?).

Creating an appropriate measuring instrument is just as important as making the decision to ask for rating feedback in the first place.

Thanks for a useful article. Risk management is an interesting lens through which to examine rating systems. I think it largely works. In addition, I think persuasion is a helpful lens because rating systems are trying to persuade users that the risk is worth taking or there is little risk. In persuasion, the credibility of the speaker / author is a significant factor in his or her ability to influence. The question is what determines credibility in the eyes of the user / customer? Looking at that from the perspective of different persuasion theories would be interesting. Also, ratings systems have much persuasive potential because they include a mix of rational and emotional appeals. The rating itself, especially an aggregate rating, comes across as objective and appeals to our rational side. The comments are more subjective and appeal more to our emotional side, as well as help us determine the speaker’s credibility.

I disagree with your assessment of Amazon’s “Most helpful positive and negative reviews” feature. It’s not important that the two reviewers aren’t judging the product by the same metrics. In fact, I’d say it’s desirable. The questions that this answers are “What things did a trustworthy person like?” and “What things did a trustworthy person not like?” Then, the potential buyer can compare the priorities of those two reviewers to their own and decide which factors they think are most important. This mirrors real life. I ask multiple people their opinions about an important decision so that I can see how different people think about the same problem.

Nice overview.

I’m curious about “defensive rating” and how eBay solved it… is rating still limited to registered, signed-in members, but they can now rate anonymously? Or does rating no longer require sign-in? Thanks.

@David Shen: I wonder if too many 4s and 5s is really a problem… anything on this end of the scale is high-quality and worthy of users’ attention… they can further decide based on other indicators. Now, if the aggregated rating is the only way to determine appropriateness, that would be a problem. I don’t think it’s powerful & relevant enough as a stand-alone feature, but it helps. As mentioned in the article, ratings become more powerful when *combined* with written reviews (for example).

Great writing

Very interesting, but I think your article, although the title didn’t imply it, focused mostly on the use of rating systems in mitigating risk for e-commerce sites, for instance, but not for social media sites. I can understand that, and it seems rather easy when anonymous, or even named, users are rating something relatively objective – a movie, an artist, a song, or a book. But what about user-generated content, where authors and users trade reputation and ratings for friendship, thus skewing any effective use of ratings? If I publish an article and all my friends rate it a 10 on a scale of 1-10, and every other article gets a 10, then 10 has no meaning.

I notice a number of IAs out there who are designing rating systems for social media systems, social networks, and user-generated media, and who are either completely ignorant of the psycho-social aspects, or willfully ignoring the sociology and psychology of ‘members’ using social media tools to mediate conversations and create content.

Thanks for all these great comments, observations, questions, and recommendations for reading. We most certainly didn’t cover all aspects of rating and reputation systems, nor did we go as deep as we would have liked. There is the whole psychological aspect of rating that we didn’t address that’s very important, particularly that people are influenced by ratings they’ve just made (if I rate something a 5 I’m more likely to rate the next thing I see a 5), and by how others rate that thing.

The story of eBay’s rating system doesn’t seem to have been documented very well anywhere, but it is pretty fascinating, especially in trying to solve the practice of defensive rating.

Rating in a social environment is also something that deserves more attention. A good place to start might be here: http://www.slideshare.net/soldierant/designing-your-reputation-system/

One thing that we avoided (on purpose) was how the information design of the rating system should look (5 stars, grades, etc etc etc). It’s a good discussion, but we wanted to focus only on the risk side of rating and reputation systems. Perhaps that’s another article ….

~alex

In my experience, Amazon’s approach, which must cover a wide range of scenarios for products, does a good job of giving an overall picture of a product. Reading great and poor reviews side by side helps me decide whether a product may be what I am looking for, has problems I did not know about, or lacks features that were in a different product. The things that do throw off ratings are when users rant about things that are not related to the product, such as the service or even the delivery guy.

Alex, I found this article a great starting point in thinking about the mechanics of rating systems which I regularly use (benefit from) but generally don’t engage in. Just recently I have been asked to think about the design of a reputation engine. Now I know that your article is mostly aimed at buyers and sellers in a transaction but the point about risk does have some extension into social media too. However I would be interested in Christina’s view about the recommendation number 4 – ‘Avoid negative reputations’.

Do you mean by this that we should avoid reputations going negative, as in ‘-3’, or that we should avoid rating and reputation systems which allow negative comment, full stop?

I notice that this site allows negative reputations (and seeing as I am currently on 0 at the point of writing this there is a danger I might go there too). But also it allows the attributed rating of 1/5 or ‘Yuck!’ which is negative in all but numerical value. This site then encourages negative comment and negative reputation where it is warranted. This obviously runs counter to your advice. What do you think about this?

I’ve found in my dealings with some of my clients that user ratings are only as good as the people who write them. While some, or for that matter most, are good, it really only takes a few bad apples to spoil the whole bunch, that whole bunch being the new and prospective clients who may be looking for what you’re offering.

So it really depends on what you’re using the rating for. What is its purpose? What end goal are you looking to achieve by using a rating system?

All in all, this was a great Article and I would recommend it to anyone interested in understanding User Generated rating systems.

Check out some of our work at http://www.fiswebdesign.com, a Dallas web design company.

A really useful article. I think you have discussed a very important issue and it’s something E-Commerce sites need to look into more.

I concur with this post… “It’s not important that the two reviewers aren’t judging the product by the same metrics. In fact, I’d say it’s desirable.”

The issue with the ratings is the perspective and in my experience it can be accommodated with a little effort.

Consider a datacube used in analytics where the perspective can be changed at will.

The way I approach it is by using stories and Metrics with 5 dimensions–Story, Process, Software, Brand and Metrics.

Either google Story Lens and Neuropersona or visit my site if measuring from multiple perspectives would help.

Cheers,

Nick

http://www.neuropersona.com

It’s the Content not the Reputation

Currently, valuing reputation depends entirely on the perspective of the judges who value the contribution, whether it is one person or thousands. The problem here is that a single judge’s perspective will differ from every reader’s, and more judges will hold perspectives that may appear close to, but will not fit, any reader, past, current, or future.

Going back to first principles for the value behind reputation systems may help. Reputation confirms content value.

While reputation systems appear to help focus on content from a trusted source, one with a good reputation, it is important to note the command and control nature of the system. The judge rules, context is fixed.

Take any story with a low reputation value and you will find any number of readers who rank it very high. Why?

It fits their context and objectives, which were apparently unimportant to the judge at time of rank pronouncement.

Are reputation systems helpful? Maybe.

On the other hand, it appears to me that they are another filter and should work with common filters such as search engines or context engines rather than being held in high esteem.

Would you place more importance on a reputation system than a google ranking?

Cheers,

Nick

http://www.neuropersona.com

great article. thanks for looking at this in detail!

it’s not that closely related, but in this article about voting systems (focusing on political elections) the authors also present some interesting findings about rating methods:

http://www.newscientist.com/article/mg19826511.600-why-firstpastthepost-voting-is-flawed.html

cheers m

Great article. The economist’s perspective perfectly describes the heart of the matter.

I recently did a consumer survey for an application we are building for the wine industry. One of the interesting results was that only 24% of people said they at least somewhat understood the existing 100 point rating system for wine. Additionally, 48% of people say they never use online rating systems to make purchasing decisions.

I think what this tells me is that some people (maybe about a quarter of the population) find rating systems irrelevant to their decision making. It’s just not how they’re wired. Moreover, some who use rating systems will be more intrigued by the individual comments than the overall numerical rating. A rating system is part of a larger system, and the larger system needs to take this into account.

Great overview. A very well thought out and nicely put article. Sites must design and implement rating systems very carefully, because they can be flawed or exploited. However, if they are well implemented, they can be great for end users.

Yes, this is quite possible.