A big part of information architecture is organisation – creating the structure of a site. For most sites – particularly large ones – this means creating a hierarchical “tree” of topics.

But to date, the IA community hasn’t found an effective, simple technique (or tool) to test site structures. The most common method used — closed card sorting — is neither widespread nor particularly suited to this task.

Some years ago, Donna Spencer pioneered a simple paper-based technique to test trees of topics. Recent refinements to that method, some made possible by online experimentation, have now made “tree testing” more effective and agile.

How it all began

Some time ago, we were working on an information-architecture project for a large government client here in New Zealand. It was a classic IA situation – their current site’s structure (the hierarchical “tree” of topics) was a mess, they knew they had outgrown it, and they wanted to start fresh.

We jumped in and did some research, including card-sorting exercises with various user groups. We’ve always found card sorts (in person or online) to be a great way to generate ideas for a new IA.

Brainstorming sessions followed, and we worked with the client to come up with several possible new site trees. But were they better than the old one? And which new one was best? After a certain amount of debate, it became clear that debate wasn’t the way to decide. We needed some real data – data from users. And, like all projects, we needed it quickly.

What kind of data? At this early stage, we weren’t concerned with visual design or navigation methods; we just wanted to test organisation – specifically, findability and labeling. We wanted to know:

* Could users successfully find particular items in the tree?

* Could they find those items directly, without having to backtrack?

* Could they choose between topics quickly, without having to think too much (the Krug Test) [1]?

* Overall, which parts of the tree worked well, and which fell down?

Not only did we want to test each proposed tree, we wanted to test them against each other, so we could pick the best ideas from each.

And finally, we needed to test the proposed trees against the existing tree. After all, we hadn’t just contracted to deliver a different IA – we had promised a better IA, and we needed a quantifiable way to prove it.

The problem

This, then, was our IA challenge:

* getting objective data on the relative effectiveness of several tree structures

* getting it done quickly, without having to build the actual site first.

As mentioned earlier, we had already used open card sorting to generate ideas for the new site structure. We had done in-person sorts (to get some of the “why” behind our users’ mental models) as well as online sorts (to get a larger sample from a wider range of users).

But while open card sorting is a good “detective” technique, it doesn’t yield the final site structure – it just provides clues and ideas. And it certainly doesn’t help in evaluating structures.

For that, information architects have traditionally turned to closed card sorting, where the user is provided with predefined category “buckets” and asked to sort a pile of content cards into those buckets. The thinking goes that if there is general agreement about which cards go in which buckets, then the buckets (the categories) should perform well in the delivered IA.

The problem here is that, while closed card sorting mimics how users may file a particular item of content (e.g. where they might store a new document in a document-management system), it doesn’t necessarily model how users find information in a site. They don’t start with a document — they start with a task, just as they do in a usability test.

What we wanted was a technique that more closely simulates how users browse sites when looking for something specific. Yes, closed card sorting was better than nothing, but it just didn’t feel like the right approach.

Other information architects have grappled with this same problem. We know some who wait until they are far enough along in the wireframing process that they can include some IA testing in the first rounds of usability testing. That piggybacking saves effort, but it also means that we don’t get to evaluate the IA until later in the design process, which means more risk.

We know others who have thrown together quick-and-dirty HTML with a proposed site structure and placeholder content. This lets them run early usability tests that focus on how easily participants can find various sublevels of the site. While that gets results sooner, it also means creating a throw-away set of pages and running an extra round of user testing.

With these needs in mind, we looked for a new technique – one that could:

* Test topic trees for effective organisation

* Provide a way to compare alternative trees

* Be set up and run with minimal time and effort

* Give clear results that could be acted on quickly

The technique — tree testing

Luckily, the technique we were looking for already existed. Even luckier was that we got to hear about it firsthand from its inventor, Donna Spencer, the well-regarded information architect out of Australia, and author of the recently released book “Card Sorting”:http://rosenfeldmedia.com/books/cardsorting/.

During an IA course that Donna was teaching, she was asked how she tested the site structures she created for clients. She mentioned closed card sorting, but like us, she wasn’t satisfied with it.

She then went on to describe a technique she called “card-based classification”:http://www.boxesandarrows.com/view/card_based_classification_evaluation, which she had used on some of her IA projects. Basically, it involved modeling the site structure on index cards, then giving participants a “find-it” task and asking them to navigate through the index cards until they found what they were looking for.

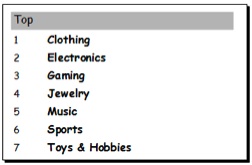

To test a shopping site, for example, she might give them a task like “Your 9-year-old son asks for a new belt with a cowboy buckle”. She would then show them an index card with the top-level categories of the site:

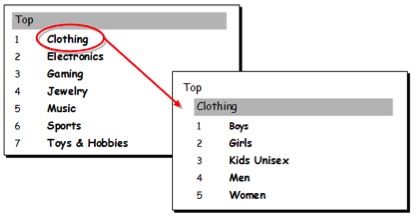

The participant would choose a topic from that card, leading to another index card with the subtopics under that topic.

The participant would continue choosing topics, moving down the tree, until they found their answer. If they didn’t find a topic that satisfied them, they could backtrack (go back up one or more levels). If they still couldn’t find what they were looking for, they could give up and move on to the next task.

During the task, the moderator would record:

* the path taken through the tree (using the reference numbers on the cards)

* whether the participant found the correct topic

* where the participant hesitated or backtracked
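
As a rough illustration of what the moderator records, here is a minimal Python sketch. It assumes the cards carry hierarchical reference numbers such as "2", "2.3" and "2.3.4" (the article does not say how the cards were numbered), and every name in it (`TaskRecord`, `count_backtracks`, `hesitated_at`) is hypothetical.

```python
from dataclasses import dataclass, field


def count_backtracks(path):
    """Count steps that do not move deeper from the current card.

    Assumption: reference numbers are hierarchical, so a child of card "2"
    always starts with "2." (for example "2.3").
    """
    return sum(
        1
        for current, nxt in zip(path, path[1:])
        if not nxt.startswith(current + ".")
    )


@dataclass
class TaskRecord:
    task: str
    path: list                      # card reference numbers, in the order visited
    correct_cards: set              # cards that count as a right answer
    gave_up: bool = False
    hesitated_at: list = field(default_factory=list)  # moderator's notes

    @property
    def found_correct(self):
        # Correct only if the participant stopped on one of the right cards.
        return (not self.gave_up) and bool(self.path) and self.path[-1] in self.correct_cards

    @property
    def backtracks(self):
        return count_backtracks(self.path)


record = TaskRecord(
    task="Find a belt with a cowboy buckle for a 9-year-old",
    path=["2", "2.1", "2", "2.3", "2.3.4"],
    correct_cards={"2.3.4"},
    hesitated_at=["2"],
)
print(record.found_correct, record.backtracks)  # prints: True 1
```

Hesitation is kept as a plain list of card numbers here because, on paper, it is a judgment call by the moderator rather than something that can be derived from the path.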

By choosing a small number of representative tasks to try on participants, Donna found that she could quickly determine which parts of the tree performed well and which were letting the side down. And she could do this without building the site itself – all that was needed was a textual structure, some tasks, and a bunch of index cards.

Donna was careful to point out that this technique only tests the top-down organisation of a site and the labeling of its topics. It does not try to include other factors that affect findability, such as:

* the visual design and layout of the site

* other navigation routes (e.g. cross links)

* search

While it’s true that this technique does not measure everything that determines a site’s ease of browsing, that can also be a strength. By isolating the site structure – by removing other variables at this early stage of design – we can more clearly see how the tree itself performs, and revise until we have a solid structure. We can then move on in the design process with confidence. It’s like unit-testing a site’s organisation and labeling. Or as my colleague Sam Ng says, “Think of it as analytics for a website you haven’t built yet.”

So we built Treejack

As we started experimenting with “card-based classification” on paper, it became clear that, while the technique was simple, it was tedious to create the cards on paper, recruit participants, record the results manually, and enter the data into a spreadsheet for analysis. The steps were easy enough, but they were time eaters.

It didn’t take too much to imagine all this turned into a web app – both for the information architect running the study and the participant browsing the tree. Card sorting had gone online with good results, so why not card-based classification?

Ah yes, that was the other thing that needed work – the name. During the paper exercises, it got called “tree testing”, and because that seemed to stick with participants and clients, it stuck with us. And it sure is a lot easier to type.

To create a good web app, we knew we had to be absolutely clear about what it was supposed to do. For online tree testing, we aimed for something that was:

* Quick for an information architect to learn and get going on

* Simple for participants to do the test

* Able to handle a large sample of users

* Able to present clear results

We created a rudimentary application as a proof of concept, running a few client pilots to see how well tree testing worked online. After working with the results in Excel, it became very clear which parts of the trees were failing users, and how they were failing. The technique worked.

However, it also became obvious that a wall of spreadsheet data did not qualify as “clear results”. So when we sat down to design the next version of the tool – the version that information architects could use to run their own tree tests – reworking the results was our number-one priority.

Participating in an online tree test

So, what does online tree testing look like? Let’s look at what a participant sees.

Suppose we’ve emailed an invitation to a list of possible participants. (We recommend at least 30 to get reasonable results – more is good, especially if you have different types of users.) Clicking a link in that email takes them to the Treejack site, where they’re welcomed and instructed in what to do.

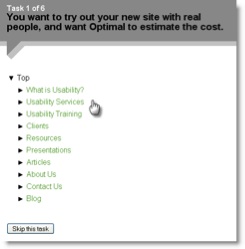

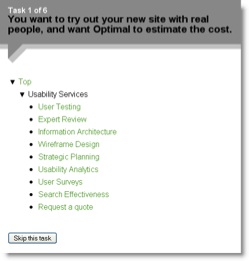

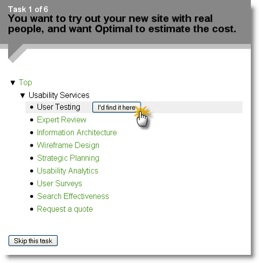

Once they start the test, they’ll see a task to perform. The tree is presented as a simple list of top-level topics:

They click down the tree one topic at a time. Each click shows them the next level of the tree:

Once they click to the end of a branch, they have 3 choices:

* Choose the current topic as their answer (“I’d find it here”).

* Go back up the tree and try a different path (by clicking a higher-level topic).

* Give up on this task and move to the next one (“Skip this task”).

Once they’ve finished all the tasks, they’re done – that’s it. For a typical test of 10 tasks on a medium-sized tree, most participants take 5-10 minutes. As a bonus, we’ve found that participants usually find tree tests less taxing than card sorts, so we get lower drop-out rates.

Creating a tree test

The heart of a tree test is…um…the tree, modeled as a list of text topics.

One lesson that we learned early was to build the tree based on the content of the site, not simply its page structure. Any implicit in-page content should be turned into explicit topics in the tree, so that participants can “see” and select those topics.

Also, because we want to measure the effectiveness of the site’s topic structure, we typically omit “helper” topics such as Search, Site Map, Help, and Contact Us. If we leave them in, it makes it too easy for users to choose them as alternatives to browsing the tree.

Devising tasks

We test the tree by getting participants to look for specific things – to perform “find it” tasks. Just as in a usability test, a good task is clear, specific, and representative of the tasks that actual users will do on the real site.

How many tasks? You might think that more is better, but we’ve found a sizable learning effect in tree tests. After a participant has browsed through the tree several times looking for various items, they start to remember where things are, and that can skew later tasks. For that reason, we recommend about 10 tasks per test, presented in a random sequence.

Finally, for each task, we select the correct answers – 1 or more tree topics that satisfy that task.
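
A task, then, is a prompt plus one or more correct topics, and each participant sees the tasks in a different random order. The following Python sketch shows one way to express that; the `Task` class, the example prompts and `tasks_for_participant` are assumptions made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    prompt: str
    correct_topics: frozenset  # one or more full topic paths that satisfy the task


# Hypothetical tasks for a shopping site.
TASKS = [
    Task(
        "Your 9-year-old son asks for a new belt with a cowboy buckle.",
        frozenset({("Clothing", "Boys", "Belts")}),
    ),
    Task(
        "You want a sun hat for the beach.",
        frozenset({("Accessories", "Hats")}),
    ),
    Task(
        "You need a plain work shirt.",
        frozenset({("Clothing", "Men", "Shirts")}),
    ),
]


def tasks_for_participant(tasks, seed=None):
    """Return the tasks in a random order without changing the master list."""
    rng = random.Random(seed)
    order = list(tasks)
    rng.shuffle(order)
    return order


for task in tasks_for_participant(TASKS):
    print(task.prompt)
```

Shuffling per participant does not remove the learning effect, but it spreads it across tasks so that no single task always benefits from being last.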

The results

So we’ve run a tree test. How did the tree fare?

At a high level, we look at:

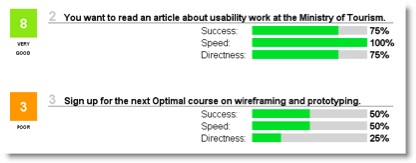

* Success – % of participants who found the correct answer. This is the single most important metric, and is weighted highest in the overall score.

* Speed – how fast participants clicked through the tree. In general, confident choices are made quickly (i.e. a high Speed score), while hesitation suggests that the topics are either not clear enough or not distinguishable enough.

* Directness – how directly participants made it to the answer. Ideally, they reach their destination without wandering or backtracking.

For each task, we see a percentage score on each of these measures, along with an aggregate score (out of 10):

If we see an overall score of 8/10 for the entire test, we’ve earned ourselves a beer. Often, though, we’ll find ourselves looking at a 5 or 6, and realise that there’s more work to be done.

The good news is that our miserable overall score of 5/10 is often some 8’s and 9’s brought down by a few 2’s and 3’s. This is where tree testing really shines — separating the good parts of the tree from the bad, so we can spend our time and effort fixing the latter.
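
Here is a minimal Python sketch of how per-task percentages can separate the good parts of a tree from the bad. It covers only Success and Directness, and it assumes that Directness means the share of participants who never backtracked. Speed and the aggregate score are left out because the article does not give formulas for them. The data and function names are made up.

```python
def rate(flags):
    """Percentage of True values in a sequence of booleans."""
    flags = list(flags)
    return 100 * sum(flags) / len(flags)


def summarise(results):
    """Map each task to (success %, directness %).

    results: {task name: [(found_correct, backtracked), ...]}
    """
    summary = {}
    for task, attempts in results.items():
        success = rate(found for found, _ in attempts)
        directness = rate(not backtracked for _, backtracked in attempts)
        summary[task] = (success, directness)
    return summary


def weak_tasks(summary, min_success=50):
    """Tasks whose success rate falls below the chosen threshold."""
    return sorted(task for task, (success, _) in summary.items() if success < min_success)


# Hypothetical results: four participants per task.
results = {
    "cowboy belt": [(True, False), (True, False), (True, True), (False, True)],
    "return an item": [(False, True), (False, False), (True, False), (False, True)],
}

summary = summarise(results)
print(summary["cowboy belt"])      # prints: (75.0, 50.0)
print(weak_tasks(summary))         # prints: ['return an item']
```

The 50% threshold is arbitrary; in practice the cut-off depends on how the scores cluster for a given test.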

To do more detailed analysis on the low scores, we can download the data as a spreadsheet, showing destinations for each task, first clicks, full click paths, and so on.
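
Once the click paths are in hand, first clicks and destinations are simple tallies. The Python sketch below assumes each path is a list of topic labels from the top of the tree down. The real spreadsheet layout is not described in this article, so the data shape and function names are hypothetical.

```python
from collections import Counter


def first_clicks(paths):
    """Tally the top-level topic each participant clicked first."""
    return Counter(path[0] for path in paths if path)


def destinations(paths):
    """Tally where participants ended up, keyed by the full path."""
    return Counter(tuple(path) for path in paths if path)


# Hypothetical click paths for one task, one per participant.
paths = [
    ["Clothing", "Boys", "Belts"],
    ["Clothing", "Boys", "Belts"],
    ["Accessories", "Belts"],
    ["Clothing", "Men", "Belts"],
    ["Accessories", "Belts"],
]

print(first_clicks(paths).most_common())
for destination, count in destinations(paths).most_common():
    print(count, " > ".join(destination))
```

Keying destinations by the full path matters when the same label (here "Belts") appears in more than one branch.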

In general, we’ve found that tree-testing results are much easier to analyse than card-sorting results. The high-level results pinpoint where the problems are, and the detailed results usually make the reason plain. In cases where a result has us scratching our heads, we do a few in-person tree tests, prompting the participant to think aloud and asking them about the reasons behind their choices.

Lessons learned

We’ve run several tree tests now for large clients, and we’re very pleased with the technique. Along the way, we’ve learned a few things too:

* Test a few different alternatives. Because tree tests are quick to do, we can take several proposed structures and test them against each other. This is a quick way of resolving opinion-based debates over which is better. For the government web project we discussed earlier, one proposed structure had much lower success rates than the others, so we were able to discard it without regrets or doubts.

Conclusion

Tree testing has given us the IA method we were after – a quick, clear, quantitative way to test site structures. Like user testing, it shows us (and our clients) where we need to focus our efforts, and injects some user-based data into our IA design process. The simplicity of the technique lets us do variations and iterations until we get a really good result.

Tree testing also makes our clients happy. They quickly “get” the concept, the high-level results are easy for them to understand, and they love having data to show their management and to measure their progress against.

You can sign up for a free Treejack account at “Optimal Workshop”:http://www.optimalworkshop.com/treejack.htm. [2]

References

1. “Don’t Make Me Think”:http://www.amazon.com/Dont-Make-Me-Think-Usability/dp/0321344758, Steve Krug

2. Full disclosure: As noted in his “bio”:http://boxesandarrows.wpengine.com/person/35384-daveobrien, O’Brien works with Optimal Workshop.

Hi Dave,

an excellent article, thank you very much for discussing this in detail. I often find that information architects are a little too complacent when they only do a card-sort in the beginning and then base all their later decisions on the assumptions gained from this initial exercise. Your approach is a nice evaluation tool, and I would love to see it!

There are a few points I wanted to raise:

1.) I often find that with information hierarchies, even a subtle difference in the way you phrase the task can lead to very different outcomes. A verb or adjective in another place can jog a participant’s memory, suddenly “solving” a problem… that’s why I’m a little cautious when you said that 10 tasks were enough. I know that participants get bored really quickly with these tasks, but only 10 tasks can potentially skew the results by relying more on a clever way of posing the question, rather than a good way of presenting the answer. I hope this makes sense.

2.) I think it would be great if your tool could show the results graphically. The great thing about tree hierarchies is that they are so easy to display – and if you then mapped user paths (for example with different colours) over such a tree hierarchy graphic, it might be easier to come to some conclusions. For example, imagine that 5 out of 20 users went down the wrong path for a certain task, but they all went down the same wrong path, the result graphic could show this effectively. The IA could then make a decision to either re-model the structure, or decide on inserting a cross-link in the form of “You might have been looking for this…”

3.) Another useful piece of functionality for your tool would be a register for the “points of incorrect decisions”. Once users are finished with the whole test, the program could display the screens where users went down a different path than what the information architecture had laid out for them. It could show that screen, circle the user decision as “This is what you chose”, and circle the “correct” one as “This is what we had in mind”. You could then ask users what didn’t work for them with your assumptions, and they might actually come up with some pretty good reasons like “I didn’t know what your word meant” or some rather mundane ones, like “Oh, I didn’t see that one.” This might be possible for online tests, too, but I’m rather thinking about the usefulness for a facilitator in a face-to-face interview.

I hope you don’t misunderstand me – I’m really excited about your tool, and I’m just thinking that it has a huge potential to convey even more information about user decisions 🙂

Cheers,

Matty

Hi Dave, and thanks for a great article!

We’ve used Treejack since its initial launch early this year and have had good experiences with it, some of which we shared in April:

Using Treejack to test your website structure

http://www.volkside.com/2009/04/using-treejack-for-ia-testing/

There is also another tool in the market called C-Inspector that is specifically designed for tree testing. We posted an article looking at C-Inspector in September, including comparisons with your Treejack tool:

Test your information architecture using C-Inspector

http://www.volkside.com/2009/09/test-your-ia-using-c-inspector/

Both articles discuss screen-based IA testing more generally, too.

Cheers, Jussi

Ps. Matthias makes some great points above that are worth taking into consideration in future development.

I’ve used a similar technique in the past and also called it tree testing. Since I wasn’t aware of any software tool for it, I created the tree using a set of Windows nested folders. It was easy to create, cost nothing, and was an interface users were familiar with and found easy to navigate. The only downside was that it took more effort to analyze the data than your tool would. However, I approached it as more of a qualitative method than quantitative, so I didn’t test large numbers of users at once and did it iteratively. Given the choice between what I did and your tool, I’ll probably use your tool next time. But if anyone is on a limited budget, they may find the folder method an acceptable substitute.

Hi Dave, Donna developed this technique when working with us, and we’ve therefore been using it for many years.

While an online tool certainly makes life easier, it’s also very simple to use this technique with handwritten filing cards. An hour to create the cards, and you can fit in several rounds of testing in just two days. Makes a huge difference in refining the IA before moving on to the wireframes…

I have done similar exercises in the past, and I think this would be a much more efficient tool for that purpose. In addition to the task-based exploration, I also begin each testing session by simply asking the participant to look at each primary (and sometimes, secondary) navigation category and tell me what they *think* they would find underneath each. It is important to do this before they have developed any preconceived notions as a result of the testing.

I’ve used Treejack for several projects now and have found it very insightful. I find it complements card sorting activities very well and also gives a good indication of potential cross-references between pieces of content. I love the fact that by using a consistent set of tasks and simply revising the tree you can measure improvement quantifiably.

Matty:

1.) I definitely agree that task phrasing is critical in tree tests, especially the avoidance of “giveaway” words. The problem we’ve seen with more than 10 tasks is that participants learn the tree structure by browsing it during early tasks, making certain later tasks easier. While randomising the task order helps, there’s still a learning effect that unnaturally skews the results (especially if your typical users are not habitual or frequent visitors). If the tree is small, we go with about 8 tasks; if it’s very large, we may go as high as 12. But our findings are admittedly limited so far – what’s needed is more experimentation with the technique to see what its real limits are.

2.) Showing user paths graphically – Yes, results that are more graphical (like what you suggest and a few other ideas we’re playing with) are definitely on the wish list. Being able to show the tree and highlight its strong areas and weak areas (either per task or across the whole test) would be a great way to show what’s important at a glance.

3.) Playback of incorrect decisions – That’s one we haven’t thought of (to my knowledge), but I like its forensic approach – that would help tease out the “why” that we’re currently missing in Treejack and several other online tools.

Good thoughts here. Thanks!

Brian: Love the idea of using a nested folder structure in a file browser – use the simplest tool that gets you the answers you’re looking for. Yes, you give up some sophistication, but you may not always need that.

James: I ran some paper-based tests before we had Treejack, partly to get results, and partly to get more familiar with the technique before designing a tool to automate it. While I found the paper version more tedious to prepare than its online counterpart, I really liked how I was able to hear the participants’ thought processes (i.e. think aloud) and ask about their more curious departures from the “correct” paths. I think it might turn out like card sorting, where both paper and online methods will get used depending on what the tester is looking for. What you mention about doing several rounds in 2 days – exactly! That kind of quick turn-around is what I like best about tree testing.

Stephanie: That idea of asking participants what they expect to see before doing the test – I’ve done that during standard usability tests, but usually when we didn’t have content behind a clicked link (“We don’t have that page available, but what would you expect to see?”), or at the end of a test, for links that they never clicked (“Tell me more about this one.”).

Probing expectations before the test starts – I agree that this can reveal useful information, but I also wonder if it affects the results of the tasks that follow? That is, if we prompt the participant to think a bit about each top-level heading up front (before they have a task to do), might this improve their performance (or even hurt it, depending on how well their preconceptions matched up with their later thinking)? That would be an interesting study to do.

dan

thanks for the article. i started reading it before i looked at your bio and was thinking to myself, “doesn’t this guy know about treejack?”

ha. i guess so.

i’ve started using the tool recently and am pretty pleased with it. keep up the good work.

very inspiring!

we’re considering using this tool for our incoming study~

Interesting article for testing trees.

The tree organization testing problem might be solved by implementing a splay binary tree algorithm approach. An example is shown on my site (Binary-Tree Demo), but the application only runs on Macs.

http://sperling.com/freeware.php

In any event, I propose a “splay menu system” that would have the most popular links appearing in order (top/down, left/right, whatever). Subsequent menus would appear likewise but further down the link tree.

The upside of using this technique would be the most popular pages would appear highest on the menu system. The downside is that the menu system would not be static, but instead always changing dependent upon visitors’ interest and selection.

I would guess that visitors’ interest will focus on certain topics and lessen the amount of menu change in a very short time. However, when new topics are provided, then the menu system would again change to adapt.

This approach would be automatic and allow the visitor to do the menu testing.

Cheers,

tedd

hi dave,

great article! i had some really good ideas while i was reading your article. so i am looking forward to solving a problem i was carrying with me for some time. but i was thinking about some questions also. it’s about test persons.

i was thinking about your conclusion: doing a better IA, not a different! (btw: a great and easy argument!). how many persons do you test by asking 10 cases? i assume you need a high enough number of test persons to have significant and reliable findings.

if you want to have a comparison between the old structure and a new one, do you choose the same persons? i think you need different groups of test persons, because the first tested group remembers the old structure and this would falsify the findings…

how much time do you spend in finding test persons that are part of the defined target group? we often use colleagues for “discount testing”.

cheers,

rene

Tedd:

Interesting idea about building dynamic menus based on popularity. Do you know any sites that have tried it?

If the site had one main type of user, I can see this working well. If there were several types of users, I wonder if that causes problems – that is, the popularity of certain sections and pages may vary greatly between user type A and user type B.

If the site had a high return-visitor rate (e.g. an intranet, where a given user visits it daily), we could also try a per-user popularity rating, so my site would have a different organisation than yours. Hmm…

Rene:

In general, we try to get at least 30 users of a given type – that seems to be the general statistical number to see medium-sized effects in any study.

However, I prefer to get about 100 users per type if I can. That way, you can spot outliers more easily. For example, if you test 30 people, and 1 chooses a given incorrect topic, that can be safely ignored. But what about 2 or 3 users? At some point those numbers become meaningful.

If I test 100 users, now I can safely ignore topics that get small numbers of hits (say, less than 5). Every time we run a tree test, we get a few seemingly bizarre choices, and it’s good to be able to ignore them with confidence.
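
As a small illustration of that cut-off, here is a Python sketch that drops wrong answers chosen by fewer than 5 participants out of 100. The counts, topic names and the function `notable_wrong_answers` are all invented.

```python
def notable_wrong_answers(destination_counts, correct, min_hits=5):
    """Keep incorrect destinations chosen by at least min_hits participants."""
    return {
        topic: hits
        for topic, hits in destination_counts.items()
        if topic not in correct and hits >= min_hits
    }


# Hypothetical destinations for one task with 100 participants.
counts = {
    "Clothing > Boys > Belts": 71,
    "Accessories > Belts": 18,
    "Clothing > Men > Belts": 8,
    "Gift Cards": 2,
    "Store Locator": 1,
}

print(notable_wrong_answers(counts, correct={"Clothing > Boys > Belts"}))
# prints: {'Accessories > Belts': 18, 'Clothing > Men > Belts': 8}
```

With only 30 participants the same threshold would be far too strict, which is why the larger sample makes the outliers easier to dismiss.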

Reusing users: Just like traditional usability testing, we aim to use fresh users each time, for just the reason you mention.

Time spent recruiting: For this type of unmoderated testing, where the participant can do it remotely on their own time, it’s usually not hard to recruit users. Usually our clients have lists that we can use. Like usability testing, we may also do discount testing with surrogate users, but we always avoid people who know too much (e.g. the project team) or are too different from our target users.

Hope this helps!

Great article thanks Dave. We’ve traditionally used a lot of card sorting but were looking to use task based testing and this looks like the way to go – we’ll definitely be using this in upcoming projects. Like you mentioned, we find it really important in an IA redesign to demonstrate that the new proposed IA is an improvement on the old one and this looks like a fast and efficient solution, especially for testing remote users.

Great article and a great little piece of software! This makes the tedious chore of IA testing a lot faster and more scalable.

Interesting Article. A quick and dirty way of doing this is in Dreamweaver.

If you build your SiteMap in Dreamweaver, it will create a set of interlinked pages and each page will have a link to the ‘children’. A user can then click-through (and back-track when needed). This will help you test your IA.

At MindTree, we use this often. Once the IA is frozen, we then use the same pages to develop clickable wireframes.

Hey Dave! Great article. I am keeping it to refer to so I can use it when I have the opportunity.